The Making of VR: An Inside Look with Developers, Part 2

From rendering optimization tricks to performance management and COVID-19 logistics, our devs share it all.

When we set out to make Star Wars™: Squadrons, we knew we wanted to offer the full pilot fantasy of sitting inside a starfighter cockpit with VR and HOTAS (hands-on throttle and stick) controllers. Although the team had extensive experience building 2D games, our expertise in VR was still in its infancy (in this article, 2D refers to displaying the game on a TV screen, not to sprite-based 2D graphics). Over the following years of development, we worked through a fair number of technical challenges and learned a great deal from them. In this article, we share that development experience and present a few of the more interesting challenges we overcame, from rendering optimization tricks to performance management and COVID-19 logistics, to give you a sense of what it took to bring the VR experience to life.

I. Rendering Optimization Tips and Tricks

Frame Rate & Resolution

On a technical level, our biggest VR challenge was achieving a solid combination of frame rate and resolution, particularly on consoles, where we were pushing the hardware to its limits. In any high-fidelity video game, the player’s perception of quality hinges on the game running at a minimum of 30 FPS (ideally 60 or more) at 1080p resolution.

2D games tolerate some variation in both frame rate and resolution, but in VR we’re not so lucky. Frame drops can cause motion sickness, eye fatigue, disorientation, and nausea, even for people with strong "VR legs" (a high resistance to motion sickness while using a VR headset).

To achieve 60 frames per second (the absolute minimum for a decent VR simulation), we needed each frame to be rendered in 16.6 milliseconds at most. In addition, VR requires a separate image to be rendered for each eye. The simplest approach would be to render two frames instead of one, but that would cut our per-image rendering budget in half (roughly 8.3 milliseconds) while still maintaining 60 frames per second. As you will read next, there are a few ways to make smarter use of the hardware and avoid drawing twice as much in the same amount of time.
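The budget arithmetic above is easy to make concrete. This is a minimal sketch with hypothetical helper names; the "naive" per-eye figure assumes each eye is rendered as a completely independent frame, which is exactly the cost the techniques below try to avoid. The 90 Hz row is only there for comparison with higher-refresh headsets.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce one displayed frame, in milliseconds."""
    return 1000.0 / refresh_hz


def naive_per_eye_budget_ms(refresh_hz: float, views: int = 2) -> float:
    """Per-view budget if every eye were rendered as a fully independent frame."""
    return frame_budget_ms(refresh_hz) / views


if __name__ == "__main__":
    for hz in (60, 90):
        print(f"{hz} Hz: {frame_budget_ms(hz):.1f} ms per frame, "
              f"{naive_per_eye_budget_ms(hz):.1f} ms per eye")
```

At 60 Hz this prints 16.7 ms per frame and 8.3 ms per eye, which is why simply drawing everything twice is not an option.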

View-Independent Calculations

To produce a stereoscopic image (in other words, a “VR image”), we need to display the scene as seen from each eye and let the brain do its magic on the rest. Each of our eyes sees the world slightly differently because our eyes are in two separate locations. In a computer simulation, however, not every rendering operation depends on the eye position and orientation. This creates an optimization opportunity: we “cache” the results of those calculations by doing them for the first eye and reusing the results for the second eye. Shadow calculations are a good example of where this optimization applies, since they are independent of the viewer’s position and orientation (they depend mostly on the positions of objects and light sources).

Shadows are rendered from the point of view of light sources. Sharing them reduces the overall frame time.
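The caching idea can be sketched as a per-frame cache that the first eye fills and the second eye reuses. This is an illustrative sketch, not the Squadrons renderer: the class and method names are hypothetical, shadow maps are stand-in strings, and the cache is keyed only on the frame index, which assumes lights and objects do not change between the two eye passes of a frame.

```python
class StereoRenderer:
    """Hypothetical renderer that shares view-independent work between eyes."""

    def __init__(self):
        self.shadow_passes = 0        # how many times shadow maps were rendered
        self._cached_frame = None     # frame index the cached shadows belong to
        self._cached_shadows = None

    def _render_shadow_maps(self, frame, lights):
        # Shadow maps are rendered from each light's point of view, so they
        # depend on lights and scene objects but never on the eye.
        self.shadow_passes += 1
        return {light: f"shadow map of {light} @ frame {frame}" for light in lights}

    def shadow_maps(self, frame, lights):
        # Computed once per frame (for the first eye), reused for the second.
        # Assumes the scene does not change between the two eye passes.
        if self._cached_frame != frame:
            self._cached_shadows = self._render_shadow_maps(frame, lights)
            self._cached_frame = frame
        return self._cached_shadows

    def render_eye(self, frame, eye, lights):
        shadows = self.shadow_maps(frame, lights)
        # ... eye-dependent work (culling, shading, etc.) would go here ...
        return f"{eye} eye, frame {frame}, {len(shadows)} shadow maps"

    def render_stereo_frame(self, frame, lights):
        return [self.render_eye(frame, eye, lights) for eye in ("left", "right")]


renderer = StereoRenderer()
for frame in range(3):
    renderer.render_stereo_frame(frame, lights=("sun", "engine_glow"))
print(renderer.shadow_passes)  # 3 shadow passes for 6 eye images
```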

Smarter Calculations

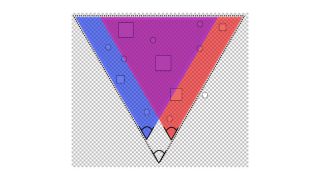

Another way to avoid doubling the GPU and CPU cost is to adjust certain calculations so they are valid for both eyes at the same time. Take frustum culling, for example, which essentially consists of deciding which world objects are “in view”. These calculations are expensive because they require traversing large spatial structures of 3D objects. While each eye technically has its own view frustum, the two frustums are almost identical. Instead of doing the calculation twice, we did it only once, using a new frustum volume that fully encloses both eyes’ frustums. This approach does result in a few world objects being rendered unnecessarily in either the left or right frame, but the savings from culling only once largely offset that small overhead (at least in our case!).

The frustums of both the left (blue) and right (orange) eyes are combined to create a bigger frustum that encompasses both views
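A simplified, top-down (2D) sketch of combined-frustum culling follows. It assumes two parallel eyes with identical, symmetric fields of view, offset horizontally by an assumed 64 mm interpupillary distance; real headset eye frustums are often asymmetric and sometimes canted, which needs a more general construction. Under these assumptions, the enclosing volume keeps the left eye’s left plane, the right eye’s right plane, and the shared near and far planes, so its apex sits slightly behind the eyes. All names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Plane2D:
    """Top-down (x, z) plane; a point is inside when nx*x + nz*z + d >= 0."""
    nx: float
    nz: float
    d: float

    def signed_distance(self, x: float, z: float) -> float:
        return self.nx * x + self.nz * z + self.d


def eye_frustum(eye_x, half_fov, near, far):
    """Planes of an eye at (eye_x, 0) looking down +z with a symmetric FOV."""
    c, s = math.cos(half_fov), math.sin(half_fov)
    return [
        Plane2D(c, s, -c * eye_x),   # left plane, inward normal points right
        Plane2D(-c, s, c * eye_x),   # right plane, inward normal points left
        Plane2D(0.0, 1.0, -near),    # near plane: z >= near
        Plane2D(0.0, -1.0, far),     # far plane: z <= far
    ]


def combined_frustum(left_eye, right_eye):
    """Volume enclosing both eyes: the left eye's left plane, the right
    eye's right plane, and the shared near/far planes."""
    return [left_eye[0], right_eye[1], left_eye[2], left_eye[3]]


def sphere_visible(planes, x, z, radius):
    """Conservative bounding-sphere vs. frustum test used for culling."""
    return all(p.signed_distance(x, z) >= -radius for p in planes)


IPD = 0.064                    # assumed interpupillary distance, metres
HALF_FOV = math.radians(50)    # assumed horizontal half field of view
left = eye_frustum(-IPD / 2, HALF_FOV, 0.1, 1000.0)
right = eye_frustum(+IPD / 2, HALF_FOV, 0.1, 1000.0)
both = combined_frustum(left, right)

# (x, z, radius) bounding spheres; culled once, then drawn for both eyes.
objects = [(0.0, 10.0, 1.0), (-30.0, 5.0, 0.5), (0.0, -5.0, 1.0)]
visible = [o for o in objects if sphere_visible(both, *o)]
print(visible)
```

Because the right eye’s right plane is the left eye’s right plane shifted outward (and vice versa), anything that passes either eye’s test also passes the combined test, so the single cull never drops a visible object; it only lets a few extra ones through.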

Lens Culling

Unlike traditional 2D images displayed on a flat screen, VR headsets have lenses shaped to accommodate our eyes being very close to the screen. The shape of those lenses allows an optimization that is simply impossible in 2D. Internally, when rendering a VR image, the objects are still drawn to a flat 2D image, but once that image is projected through the lens, the distortion makes parts of it impossible to see. We use this property to apply a simple culling mask to those outer-edge pixels, saving a significant amount of GPU power.

The black area around the lens isn’t visible from inside the VR headset
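The masking idea can be sketched on the CPU as a per-pixel visibility mask. This is a hypothetical model: it assumes the visible area is an ellipse inscribed in the eye buffer, whereas real headsets define their own hidden-area shape (OpenVR, for instance, exposes it as a per-eye mesh). In an engine, such a mask is typically rasterized once into the depth or stencil buffer so the GPU rejects those pixels before shading them.

```python
def lens_visibility_mask(width, height, radius=1.0):
    """Boolean mask per pixel: True where the pixel can be seen through the lens.

    Hypothetical model: the visible area is an ellipse centred in the eye
    buffer (radius 1.0 touches the buffer edges).
    """
    mask = []
    for y in range(height):
        v = (y + 0.5) / height * 2.0 - 1.0      # pixel centre mapped to [-1, 1]
        row = []
        for x in range(width):
            u = (x + 0.5) / width * 2.0 - 1.0
            row.append(u * u + v * v <= radius * radius)
        mask.append(row)
    return mask


W, H = 96, 108   # scaled-down stand-in for a 960x1080 eye buffer
mask = lens_visibility_mask(W, H)
visible_pixels = sum(sum(row) for row in mask)
culled_fraction = 1.0 - visible_pixels / (W * H)
print(f"{culled_fraction:.1%} of pixels never need shading")
```

With an inscribed ellipse, roughly 1 - π/4 (about a fifth) of the buffer is skipped; the actual savings depend entirely on the headset’s lens geometry.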

Forward Rendering

In VR, you effectively need to render many more pixels than in 2D, not only because there are two eyes to render for, but also because each image is presented so close to the eye that it requires a higher resolution to avoid appearing pixelated or blurry. With this in mind, and considering that writing to the G-buffer is quite expensive, we found that deferred rendering did not scale well enough in VR and decided to fall back to the traditional forward-rendering method instead. This change had a fairly negligible impact on visual fidelity, since a space combat game has far fewer light sources than an average action/adventure game.

We originally implemented this technique for PSVR only, but it worked so well that we added it to the PC version as a patch, so that anyone without a recent high-end GPU could still enjoy Star Wars: Squadrons in VR with a better frame rate.
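A back-of-the-envelope estimate shows how G-buffer traffic scales with the number of views. The 16 bytes per pixel is a hypothetical layout (four 32-bit render targets), not the game’s actual G-buffer; the 960x1080 per-eye size comes from the PSVR resolution mentioned in this article. The estimate ignores compression and MSAA and counts only the geometry pass, even though the lighting pass reads all of this data back.

```python
def gbuffer_write_bytes_per_second(width, height, views, bytes_per_pixel, fps):
    """Bytes written to the G-buffer each second by the geometry pass alone.

    The lighting pass then reads this data back, so real traffic is higher.
    """
    return width * height * views * bytes_per_pixel * fps


BYTES_PER_PIXEL = 16   # hypothetical layout: four 32-bit render targets
mono = gbuffer_write_bytes_per_second(960, 1080, 1, BYTES_PER_PIXEL, 60)
stereo = gbuffer_write_bytes_per_second(960, 1080, 2, BYTES_PER_PIXEL, 60)
print(f"one view: {mono / 1e9:.2f} GB/s, two eyes: {stereo / 1e9:.2f} GB/s")
```

Under these assumptions, two eyes at 60 Hz already write about 2 GB/s before any lighting happens. Forward rendering avoids that write/read round trip, at the cost of evaluating lights per object, which is cheap when a scene has few light sources.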

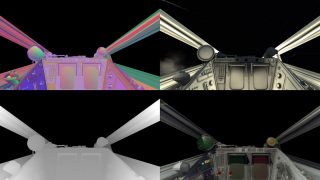

Part of our G-buffer layout used in 2D mode, plus the final image (bottom right)

Dynamic Resolution

Dynamic resolution is an extremely common optimization technique nowadays. The engine automatically and temporarily lowers the resolution to compensate for a few heavy frames. As long as the resolution does not drop too low or stay low for too long, most people won’t notice the change in quality.

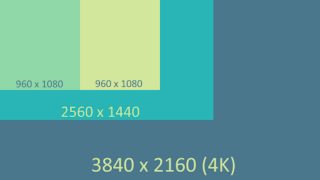

For technical reasons beyond the scope of this article, dynamic resolution was not implemented on PC, so only the console versions benefited from this feature. Ultimately, the gains from this technique in VR were minimal compared with 2D. To get the most out of it, the “default” resolution has to be set higher than the bare minimum; for example, in 2D on PS4 we use 1080p as the default and 900p as the lower bound (the engine will not drop below that limit). However, given the resolution we could afford for PSVR on a base PS4, we didn’t have the wiggle room needed to make this technique fully beneficial, and resolution drops are a lot more noticeable when a screen is mere centimeters from your eyes. That said, dynamic resolution did give us a pretty nice resolution boost on PS4 Pro and PS5, thanks to the extra processing power available on both consoles.

A subset of supported resolutions. From full 4K (2D mode) to 960x1080 (PSVR, per eye)
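A dynamic-resolution controller can be sketched using the 2D PS4 bounds mentioned above (1080p default, 900p floor). This is a minimal illustration, not the engine’s logic: the class name, the square-root heuristic (GPU cost assumed proportional to pixel count), and the smoothing factor are all assumptions.

```python
import math
from dataclasses import dataclass, field


@dataclass
class DynamicResolution:
    """Hypothetical controller choosing a render height between two bounds."""
    default_height: int = 1080          # also the upper bound
    min_height: int = 900               # the engine never drops below this
    budget_ms: float = 1000.0 / 60.0    # GPU time allowed per frame
    smoothing: float = 0.5              # fraction of the correction applied per frame
    height: float = field(init=False, default=0.0)

    def __post_init__(self):
        self.height = float(self.default_height)

    def update(self, gpu_ms: float) -> int:
        """Feed the last frame's GPU time; returns the height for the next frame."""
        # Assume GPU cost is proportional to pixel count, i.e. to height squared
        # at a fixed aspect ratio, so scale height by sqrt(budget / measured).
        target = self.height * math.sqrt(self.budget_ms / gpu_ms)
        # Only move part of the way to avoid oscillating on noisy timings.
        self.height += self.smoothing * (target - self.height)
        self.height = max(self.min_height, min(self.default_height, self.height))
        return round(self.height)


dr = DynamicResolution()
heights = [dr.update(ms) for ms in (15.0, 19.0, 19.0, 19.0, 14.0, 12.0)]
print(heights)  # drops during the heavy 19 ms frames, recovers to 1080 afterwards
```

The clamp is what makes the lower bound meaningful: under sustained heavy load the controller settles at 900 rather than degrading further, which is also why a low default resolution leaves it little room to help.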

What About Other Tricks?

There were other techniques we intended to look into, such as foveated rendering, but ultimately we were too far along in the project to make such a major change, and we needed to balance our efforts with other needs such as debugging and stabilization. Some optimizations are promising on paper, but as a software developer you always have to think about how much code you will have to rewrite, what other systems may be affected, and how much risk the change carries. Much like a major home renovation, major changes are rarely plug-and-play: you have to tear down nearby elements and be ready for surprises.

One other technique that seemed promising, but was also abandoned because of how much rework it would require, was hybrid mono rendering. The idea is that when an object is far enough from the eyes, there’s little difference between what each eye “sees” (try it at home by closing your left and right eyes in turn). Games have long exploited this: think of the parallax effects in SNES/Genesis games, where objects scrolling slower in the background and faster in the foreground produce an illusion of depth. The same idea could have applied in a VR game, where objects beyond a certain distance only need to be drawn once for both eyes. As simple as the idea may seem, implementing it posed too many challenges in a rendering pipeline that wasn’t designed with this algorithm in mind. Not to mention that, as with most optimizations, you can never predict the gains with 100% accuracy, so this change may or may not have been worth it.
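How far is “far enough”? A rough estimate compares the angle between the two eyes’ lines of sight with the angle covered by one pixel. The 64 mm IPD, the 100° horizontal field of view spread over 960 pixels, and the one-pixel threshold are all assumptions, and the uniform pixels-per-radian mapping ignores lens distortion and off-axis objects; this is a sketch of the reasoning, not a tuned mono-rendering threshold.

```python
import math


def disparity_pixels(distance_m, ipd_m=0.064, fov_deg=100.0, width_px=960):
    """Approximate left/right screen disparity, in pixels, of a point straight
    ahead at distance_m (assumes a uniform pixels-per-radian mapping)."""
    # Angle between the two eyes' lines of sight when looking at the point.
    vergence = 2.0 * math.atan((ipd_m / 2.0) / distance_m)
    return vergence * (width_px / math.radians(fov_deg))


def mono_distance(max_disparity_px=1.0, ipd_m=0.064, fov_deg=100.0, width_px=960):
    """Distance beyond which disparity stays below max_disparity_px, i.e. where
    an object could be drawn once and shared by both eyes."""
    vergence = max_disparity_px / (width_px / math.radians(fov_deg))
    return (ipd_m / 2.0) / math.tan(vergence / 2.0)


threshold = mono_distance()
print(f"Objects beyond ~{threshold:.0f} m differ by less than one pixel between eyes")
```

Under these assumptions the threshold lands around 35 m, which is modest in a space battle where most ships and capital vessels are much farther away; the hard part, as noted above, is fitting such a split into an existing pipeline.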

Optimizing Star Wars: Squadrons was a constant effort during at least the last 12 months of the project, involving several programmers, technical artists, and quality assurance specialists. During that time, we implemented other optimizations, many of which consisted of improving core parts of the engine to better handle the additional workload VR requires. Without going into detail, these optimizations included:

- Multithreading changes to avoid CPU cores sitting idle

- Quality tweaks (number of active AI, rates of fire, etc.)

- More quality tweaks (turning certain visual effects off in VR only, whenever the loss of quality was an acceptable tradeoff with the performance gain)

Despite our many optimizations, many objects and effects still need to be rendered twice in VR. There is also a fair amount of extra load on the CPU side, such as preparing that second frame, as well as other VR-specific “under the hood” processing such as head tracking, frame reprojection, camera updates, UI, and 3D audio. Had Squadrons been a VR-only title, we would have had more freedom to enforce stricter asset budgets; however, we needed to maintain parity between the 2D and VR experiences. This was partly because our game has multiplayer, which makes parity a requirement, but most importantly because our vision for VR has always been to offer the same full experience as 2D, not just a subset of the game.

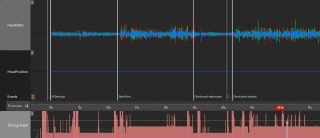

Monitoring of head tracking updates under heavy load

Visual Fidelity: Managing 2D/VR Parity

Star Wars: Squadrons is rather unique in that it supports both VR and 2D for the entire game. We’re not the only title that does this, but most games either have a small VR-enabled mode or are VR-only. Keeping our commitment to deliver a great experience in both 2D and VR was a significant technical challenge, and next we’ll share key aspects of how we maintained visual fidelity across both. We also have an entire article covering the design aspects of building a 2D+VR game, which we recommend reading!

Those who have played it in VR know that Squadrons is pretty intense as far as VR games are concerned. Knowing this from the get-go, we made sure VR could be toggled on and off at any time. If you ever start feeling sick (e.g. nauseous) in the middle of an important Fleet Battle match, you do not want to have to restart your game! This design constraint meant that both modes had to be supported in the same executable, and our code could never make any assumption about which mode was active. In game optimization, assumptions are usually extremely useful: for example, they let you skip certain memory allocations or avoid loading certain shaders. In our case, though, everything was built so that the rendering mode (VR or 2D) could change at any time. In particular, both modes had to run off the same set of data, and all visual fidelity adjustments were dynamic at run-time.
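The “no assumptions about the active mode” rule can be sketched as per-mode fidelity profiles that are looked up every frame over one shared data set. Everything here is hypothetical for illustration (the enum, profile fields, and lighting-model labels); the point is that toggling VR only changes which profile the next frame reads, with nothing reloaded.

```python
from dataclasses import dataclass
from enum import Enum


class RenderMode(Enum):
    FLAT = "2D"
    VR = "VR"


@dataclass(frozen=True)
class FidelityProfile:
    """Hypothetical per-mode settings, resolved at run-time rather than at load."""
    views: int             # 1 image in 2D, one per eye in VR
    lighting_model: str    # hypothetical labels for the 2D and VR lighting models
    sharpening: bool       # extra pass to counter VR's lower resolution


PROFILES = {
    RenderMode.FLAT: FidelityProfile(views=1, lighting_model="full", sharpening=False),
    RenderMode.VR: FidelityProfile(views=2, lighting_model="vr", sharpening=True),
}


class Renderer:
    def __init__(self, assets):
        self.assets = assets           # one shared data set for both modes
        self.mode = RenderMode.FLAT

    def toggle_vr(self):
        # Safe at any time: nothing is reloaded, the next frame simply
        # picks up the other profile.
        self.mode = RenderMode.VR if self.mode is RenderMode.FLAT else RenderMode.FLAT

    def render_frame(self):
        profile = PROFILES[self.mode]  # looked up every frame, never assumed
        return [f"view {i}: {profile.lighting_model} lighting"
                for i in range(profile.views)]


game = Renderer(assets={"tie_fighter": "mesh"})
print(len(game.render_frame()))   # 1
game.toggle_vr()                  # e.g. mid-match
print(len(game.render_frame()))   # 2
```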

One good example is our lighting system: we use a completely different lighting model in VR, which meant we could tweak or remove features without affecting the look of the non-VR version. Did you notice that there is almost no specular contribution when playing the game at the lowest quality settings?

To compensate for the lower resolution, we implemented a sharpening pass at the very end of the rendering pipeline, which greatly reduced blurriness. Because our UI is mostly in-world (rather than a more classic 2D HUD), that blurriness had been seriously affecting text quality. Our UI and UX teams had to experiment with different fonts, font sizes, colors, and contrast levels to make sure text is legible at all times. That ended up being quite a challenge, but we think the emphasis on keeping everything in-world greatly helped create a feeling of presence.
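The article does not say which sharpening filter Squadrons uses, so the following is a generic unsharp-mask style filter, shown only to illustrate what such a pass does. On the GPU it would be a full-screen shader pass; here it runs on a small grayscale image (rows of floats in [0, 1]) for readability. The function name and the `amount` parameter are assumptions.

```python
def sharpen(image, amount=0.5):
    """Unsharp-mask style sharpening on a grayscale image (rows of floats in [0, 1]).

    Each pixel is pushed away from the average of its four neighbours:
    out = p + amount * (p - mean(neighbours)). Borders reuse the edge pixel.
    """
    h, w = len(image), len(image[0])

    def px(y, x):
        # Clamp-to-edge sampling so border pixels have four neighbours.
        return image[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            p = px(y, x)
            blur = (px(y - 1, x) + px(y + 1, x) + px(y, x - 1) + px(y, x + 1)) / 4.0
            row.append(min(1.0, max(0.0, p + amount * (p - blur))))
        out.append(row)
    return out


# A soft vertical edge, as a low-resolution render might produce.
soft_edge = [[0.2, 0.2, 0.4, 0.6, 0.8, 0.8]] * 3
print([round(v, 3) for v in sharpen(soft_edge)[1]])
```

Flat areas are left untouched, while the dark side of the edge gets darker and the bright side brighter, which is what restores some crispness to in-world text.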

II. Logistical Challenges

Performance Tracking

Besides implementation aspects, there’s a lot of preliminary data gathering and analysis involved in optimizing a game. Not only do you need to know when and where your game’s performance is low, you also need reliable before-and-after measurements to properly assess optimization results in a real-world environment.

The first challenge in collecting consistent performance data is accounting for the natural variability of a 5v5 multiplayer game: ship selection, customization, map, ship outfits, play style, etc. Next come the platform combinations to test on. This article focuses on VR, but keep in mind that there are also the Xbox One, Xbox One X, PS4, PS4 Pro, PSVR+PS4, and PSVR+PS4 Pro, and of course PC, with its multitude of hardware configurations.

Another consideration in collecting data is the heavy logistics involved in having 10 testers available every day.

During development, keeping builds consistently stable can be tricky, and for performance tracking this means we might not get new data every day. As a team, we had to be disciplined about game stability so that this problem didn’t affect us too often.

Then comes the question of what to do with the data. It consists of real-time performance metrics captured 60 times per second for 10 players over half an hour, and sometimes it contains noise, new bugs, user errors, etc. To help break down, analyze, and visualize the information, we developed a small web-based portal that tracked specific high-level values over time, such as overall CPU and GPU frame duration. It was a simple, rudimentary, ad-hoc tool that proved helpful, while other tools were available when more detailed data was required (which was often!).

Excerpt of our simple and rudimentary high-level performance report
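At 60 samples per second for 10 players over 30 minutes, a single session produces 1,080,000 frame-time samples, so reducing them to a few comparable numbers per build is essential. The sketch below shows one way to do that; the chosen metrics (median, nearest-rank 99th percentile, share of frames over the 60 Hz budget) and the function names are assumptions rather than what the portal actually reported, and the input timings are synthetic.

```python
import math
import statistics


def summarize(frame_times_ms, budget_ms=1000.0 / 60.0):
    """Reduce raw per-frame timings to a few high-level values per build."""
    ordered = sorted(frame_times_ms)
    rank = math.ceil(0.99 * len(ordered))          # nearest-rank 99th percentile
    over_budget = sum(1 for t in frame_times_ms if t > budget_ms)
    return {
        "median_ms": statistics.median(ordered),
        "p99_ms": ordered[rank - 1],
        "over_budget_pct": 100.0 * over_budget / len(ordered),
    }


def compare(before, after):
    """Per-metric change from one build to the next (negative means faster)."""
    old, new = summarize(before), summarize(after)
    return {key: new[key] - old[key] for key in old}


# Synthetic timings standing in for one capture of each build.
before = [15.0] * 90 + [20.0] * 10
after = [14.0] * 95 + [18.0] * 5
print(compare(before, after))
```

Tracking a tail metric alongside the median matters in VR: a build can improve its typical frame while still producing the occasional long frame that players feel as a hitch.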

Hardware Logistics and Working From Home

In March 2020, our team was no more prepared than the rest of the world for what was to come. The pandemic escalated very quickly, and we were all told to stay home on fairly short notice. Everyone switched to working from home, using a program that let us use a computer remotely. This setup was fine for certain types of work, but for VR it was a huge blocker.

We swiftly regrouped and started shipping work PCs to people’s homes, along with any required hardware such as console devkits and, of course, VR headsets. Back then, this felt like an aggressive measure, but we wanted to take no chances in case the shutdown lasted longer than the initially expected two to four weeks.

While some studio members were waiting for hardware at home, we added a special debug command to the game that toggled “VR quality” rendering in 2D. It certainly wasn’t perfect, but it allowed artists to check their LOD settings, lighting quality, and other effects despite not yet having a VR headset.

As the days and weeks went by, it became progressively clearer that working from home was not going to be just a short-term challenge. We continued to send hardware to people (and our facilities team became quite efficient at it), but we soon hit a different problem: people started running out of space for all this hardware and ran into power supply and room temperature issues, another piece to consider in this big puzzle. VR headsets certainly made this problem worse, since most come with cameras, sensors, and wires, and some require a full room setup. At some point, we joked about having to buy drills to build setups. Not exactly the kind of hardware you expect a video game studio to need!

Of course, working from home also brought some new advantages: we could rest on a nearby bed or couch when we needed to recover from motion sickness (which a work-in-progress VR game gives you a lot of!).

Working with a Wide Array of Headsets

Over time, our ambition grew. VR was shaping up great, and since this game is a love letter to Star Wars fans, the team wanted to make it accessible to as many VR owners as possible. When our marketing campaign started, it generated a lot of interest from the public, and from VR owners in particular. At that point, we started to realize that our VR audience was using a broad range of headsets, many of which were not readily available to us at the time. We responded by reaching out to various manufacturers, and their response was generally fantastic. Most of them sent us hardware to test on; we were even given access to pre-release models, and some manufacturers offered hands-on programming support. It was amazing to see that kind of positive response. (Our experience with HOTAS devices was similar, but we’ll keep that for another article.)

The big shift in scope came when we decided to add Steam and OpenVR support to our title, opening a Pandora’s box of headset-specific quirks in how each device adheres to the OpenVR API.

On this note especially, we thank our VR community for their feedback and patience before and after launch, which helped us improve the game.

It was a real challenge, but we’re glad that we could support so many headsets. The number of players that ended up experiencing the game in VR went beyond our wildest hopes.

Conclusion

Star Wars: Squadrons was meant to be playable in VR, and we’re happy that we had the opportunity to bring this version of the game to the world. It came with its challenges, both technical and organizational, but once again our team overcame them with brio. Seeing an overwhelmingly positive reaction from the community showed us that it was definitely the right decision and that all that work was worth the effort. For many of us, being a starfighter pilot was a childhood dream, so being able to make it happen by building the necessary technology (our other passion!) made the realization of that dream even more rewarding.

Lucasfilm, the Lucasfilm logo, STAR WARS and related properties are trademarks and/or copyrights, in the United States and other countries, of Lucasfilm Ltd. and/or its affiliates. © & ™ 2021 Lucasfilm Ltd. All rights reserved.

About the Authors

Keven Cantin

Keven Cantin is the Lead Senior Software Developer of the Core Tech team on Star Wars: Squadrons. He finds it weird to write something in third person, especially when talking about a first-person VR game.

Julien Adriano

Julien Adriano is the Project Technical Director on Star Wars: Squadrons. He takes no credit for any of the cool stuff above, but he was always there to support Keven and his team on this crazy adventure.